MMWormhole was released two days ago and the response has been phenomenal. It feels like many iOS developers who jumped into extension development were dealing with this challenge already, and this library seems to solve that problem for everyone. That's really great to see!

The main challenge that MMWormhole could not overcome, though, was opening the containing app in the background to deliver a message. Many developers requested this feature, and to Apple's credit, the WatchKit team has been very receptive. Today, with the release of WatchKit Beta 2, we got -openParentApplication:reply:, which wakes up your containing app in the background so that it can handle a request. This is exactly what so many developers needed to build more innovative Apple Watch apps, so it's exciting to see Apple taking those requests seriously.

Given the timing of just releasing MMWormhole a few days ago, I was curious whether or not it had jumped the gun. Does this new API in WatchKit obviate the need for MMWormhole? I don't think it does. I think that the two actually work really well together.

One of the areas where we are finding MMWormhole to be so valuable is passing information to the extension that is used to set up the UI on the watch. That includes things like the title or date of a label, or the contents of table cells. If the wormhole represents a set of mailboxes, the messages passed to mailboxes on the extension are a great way to tell the watch app what its UI state should be, whether the watch app is awake or not. It can check the contents of those mailboxes when it wakes up and set up its UI accordingly. This is a case that can be handled in all sorts of ways, including NSUserDefaults or Core Data persistent store sharing, but I think this method of passing JSON or NSCoded messages is a simple and elegant solution.
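To make the mailbox idea concrete, here is a minimal sketch in Swift. It is not MMWormhole's implementation or API. `Mailbox`, `LabelState`, and the `runSummary` identifier are hypothetical names made up for illustration, and a temporary directory stands in for the shared app group container so the sketch can run anywhere.

```swift
import Foundation

// Hypothetical UI state the containing app wants the watch extension to show.
struct LabelState: Codable, Equatable {
    let title: String
    let subtitle: String
}

// Hypothetical file-backed mailbox: one JSON file per message identifier.
struct Mailbox {
    let directory: URL

    init(directory: URL) throws {
        self.directory = directory
        try FileManager.default.createDirectory(at: directory, withIntermediateDirectories: true)
    }

    private func fileURL(for identifier: String) -> URL {
        return directory.appendingPathComponent(identifier).appendingPathExtension("json")
    }

    // Sender side: overwrite the mailbox with the latest message.
    func post<Message: Encodable>(_ message: Message, to identifier: String) throws {
        let data = try JSONEncoder().encode(message)
        try data.write(to: fileURL(for: identifier), options: .atomic)
    }

    // Receiver side: read the mailbox on wake. nil means nothing readable has been posted.
    func latest<Message: Decodable>(_ type: Message.Type, from identifier: String) -> Message? {
        guard let data = try? Data(contentsOf: fileURL(for: identifier)) else { return nil }
        return try? JSONDecoder().decode(type, from: data)
    }
}

// Assumption: a temporary directory stands in for the shared app group container.
let sharedDirectory = FileManager.default.temporaryDirectory
    .appendingPathComponent("mailbox-sketch-\(UUID().uuidString)")
let mailbox = try Mailbox(directory: sharedDirectory)

// Containing app side: post the state while the watch app may be asleep.
try mailbox.post(LabelState(title: "Morning Run", subtitle: "5.2 km"), to: "runSummary")

// Extension side, later: read the mailbox on wake and configure the UI from it.
let restored = mailbox.latest(LabelState.self, from: "runSummary")
print(restored?.title ?? "no message yet")
```

The point of the design is that the sender never needs to know whether the receiver is running. The latest message simply waits in its mailbox until someone reads it.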

The other area where MMWormhole tends to make more sense is passing large chunks of data between locations. We assume the openParentApplication method passes data via XPC, which would imply that the data isn't being written to disk. MMWormhole now supports NSCoding and writes objects straight to disk, and it passes only a notification to the other side telling it to check the contents of that data.

Finally, there is the case of informing the extension of a change from the containing app. This may be a relatively rare case, but I think it still bears mentioning. One example of this could be a network request on the containing app to download a list of items that need to be populated on the watch in a table view. Rather than frequently checking with a timer in the extension to see if the contents have changed, MMWormhole can notify the extension of a change, and the extension can repopulate the table.
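The notify-instead-of-poll pattern can be sketched roughly like this. Again, this is an illustration under assumptions rather than how MMWormhole is written: `ChangeNotifier` is a hypothetical in-process stand-in for a real cross-process notification channel, `ItemPublisher` and `TableController` are made-up names, and a temporary file stands in for shared storage. The notification carries only an identifier, and the listener re-reads the payload from disk when it fires.

```swift
import Foundation

// Hypothetical in-process stand-in for a cross-process notification channel.
// It carries only a message identifier, never the payload itself.
final class ChangeNotifier {
    private var listeners: [String: [() -> Void]] = [:]

    func listen(for identifier: String, handler: @escaping () -> Void) {
        listeners[identifier, default: []].append(handler)
    }

    func notify(_ identifier: String) {
        for handler in listeners[identifier] ?? [] { handler() }
    }
}

// Containing app side: write the payload to disk, then announce the change.
struct ItemPublisher {
    let file: URL
    let notifier: ChangeNotifier

    func publish(_ items: [String]) throws {
        try JSONEncoder().encode(items).write(to: file, options: .atomic)
        notifier.notify("items")
    }
}

// Extension side: no timer. The table reloads only when a change is announced.
final class TableController {
    private(set) var rows: [String] = []
    private(set) var reloadCount = 0

    init(file: URL, notifier: ChangeNotifier) {
        notifier.listen(for: "items") { [weak self] in
            guard let self = self,
                  let data = try? Data(contentsOf: file),
                  let items = try? JSONDecoder().decode([String].self, from: data) else { return }
            self.rows = items
            self.reloadCount += 1
        }
    }
}

// Assumption: a temporary file stands in for storage shared by app and extension.
let file = FileManager.default.temporaryDirectory
    .appendingPathComponent("items-sketch-\(UUID().uuidString).json")
let notifier = ChangeNotifier()
let table = TableController(file: file, notifier: notifier)
let publisher = ItemPublisher(file: file, notifier: notifier)

// Two pretend network responses arrive on the containing app.
try publisher.publish(["Milk", "Eggs"])
try publisher.publish(["Milk", "Eggs", "Bread"])
print(table.rows, table.reloadCount)
```

The table reloads exactly once per published change, and the extension does no work at all between changes, which is the advantage over polling with a timer.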

The way I plan to use these tools in the apps that I am working on is to use MMWormhole to pass information and updates to and from the extension, and to use openParentApplication to invoke critical commands on the containing app. For example, let's say that the watch app needs to trigger a background save request to update a web service, and that request needs to happen immediately because the user expects the change to be reflected in real time. Or, if having fresh data is essential to the experience on the watch, then waking the app up to provide that data may be required.

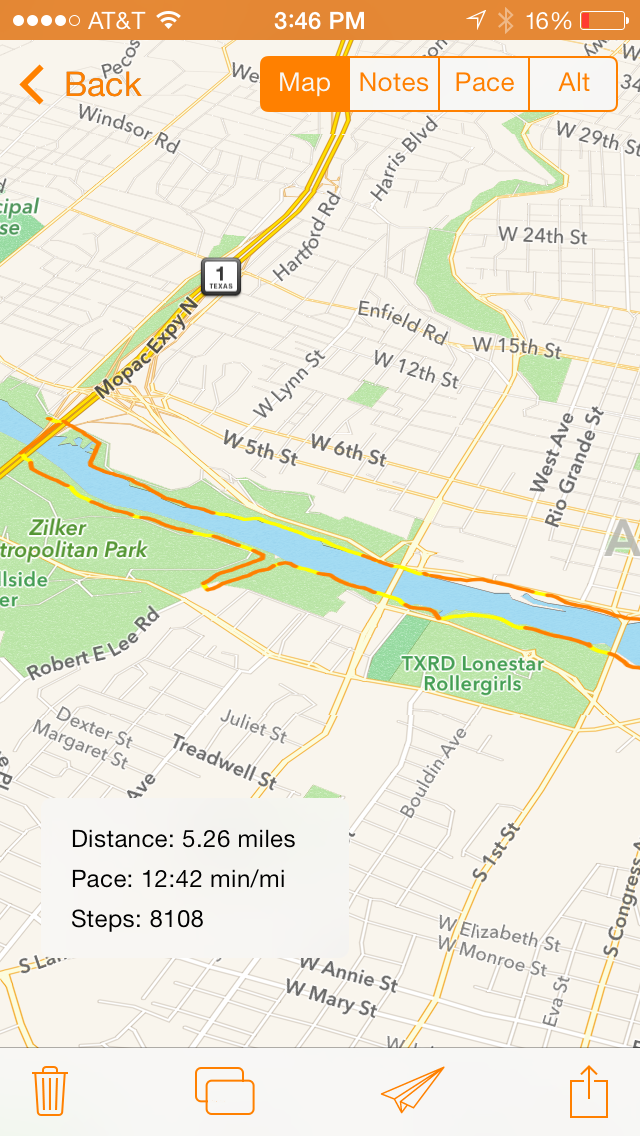

I think it's important that developers consider carefully when to open the parent application and when not to. Opening the parent app every time you want to transfer data is likely not a good use of this method. Invoking critical commands or beginning a user-initiated background task, like location tracking for a navigation or fitness app, seem like great uses. Imagine having a runner's stopwatch app that required you to pull out your phone to start the run, rather than just tapping a button on your watch. This API is a perfect way to provide that user experience. But you wouldn't really want to use the reply block to tell the watch how far you've travelled. That's where I think MMWormhole still provides a lot of value.

It's great to see this much progress on WatchKit already. If you haven't yet, please file radars on WatchKit and participate on the WatchKit Developer Forums. There is a lot of exciting activity happening there.

Update:

This thread on the WatchKit Developer Forums outlines the expected means of communication between the containing app and its extensions. The official response includes using the Darwin notification center to communicate with a running iOS app or a running extension. That makes me feel even better that MMWormhole will continue to be a big help for building extensions, because it directly addresses several communication scenarios for sharing data between an app and its extensions.