I was already well into developing Runtime when the iPhone 5s was announced and we learned about the new M7 "motion co-processor" from Apple. There have already been a few good articles talking about what the M7 does and how we believe it works, but from a developer's perspective the M7 essentially provides a great way to track a user's steps and type of activity while they are moving. Instead of writing about what the M7 is or how it works, I wanted to write about what it's like to use as a developer.

The M7 API is part of the Core Motion framework. Tracking a user's steps and activity has always been possible with Core Motion, but it was much more difficult and required much more power. Instead of trying to calculate this information ourselves using data directly from the accelerometer and gyroscope, we interact with two new classes that give us this data directly.

Steps

The first one, CMStepCounter, provides us with the number of steps the user has taken while carrying the device. There are only a few methods here. There's a class method to tell you whether or not the device supports step counting, aka whether or not the M7 is installed. There are two methods for starting and stopping step updates. And then there is a method to query the history of steps taken with a start and end date.

Let's talk about getting step updates first. While your app is running, you can ask iOS to execute a block every time a certain threshold number of steps is reached. Runtime uses this method to update the Stopwatch screen while the user is running. From my experience, the updates are delivered about when you would expect them to be.
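To make the update flow concrete, here is a rough sketch in Swift. The `StepUpdateExample` class name and the threshold of 10 steps are my own placeholders, and the method signatures are written from my reading of how Core Motion imports into Swift, so check them against the headers. Note that `CMStepCounter` was later deprecated in favor of `CMPedometer`, so expect a deprecation warning on newer SDKs.

```swift
import CoreMotion
import Foundation

// Hypothetical wrapper class; only the CMStepCounter calls come from Core Motion.
final class StepUpdateExample {
    // Keep a strong reference so updates keep arriving.
    private let stepCounter = CMStepCounter()

    func start() {
        // Class method: false on devices without step counting hardware.
        guard CMStepCounter.isStepCountingAvailable() else {
            print("Step counting is not available on this device")
            return
        }

        // Ask for a callback roughly every 10 steps (placeholder threshold),
        // delivered on the main queue so the UI can be updated directly.
        stepCounter.startStepCountingUpdates(to: OperationQueue.main, updateOn: 10) { steps, timestamp, error in
            if let error = error {
                print("Step update failed: \(error)")
                return
            }
            print("\(steps) steps as of \(timestamp)")
        }
    }

    func stop() {
        stepCounter.stopStepCountingUpdates()
    }
}
```

You would call `start()` when the stopwatch begins and `stop()` when it ends.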

There is also a query method to look up the number of steps during a certain time frame. The M7 stores 7 days' worth of data, so the window can be any period during those 7 days. The most surprising thing to me about this API was how fast it is. Querying even an entire week's worth of step data takes virtually no time. Despite this, you still have the option to specify the queue the block is executed on. You can specify the main queue if you want the callback to run on the main thread. If you're going to be updating the UI with the result, you might as well just do that. If you're performing some other type of calculation with the result, then you might want to use a background queue.
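A query over the full 7-day window might look something like the sketch below. The function name `logStepsForPastWeek` is made up for illustration, and the signature of the query call is my best understanding of the Swift import of `CMStepCounter`, not something to take as gospel.

```swift
import CoreMotion
import Foundation

// Hypothetical helper. The caller owns `stepCounter` and should keep it
// alive until the handler has run.
func logStepsForPastWeek(using stepCounter: CMStepCounter) {
    guard CMStepCounter.isStepCountingAvailable() else {
        print("Step counting is not available on this device")
        return
    }

    let now = Date()
    let weekAgo = now.addingTimeInterval(-7 * 24 * 60 * 60) // 7 days in seconds

    // The handler runs on the queue passed in; main is fine for UI work.
    stepCounter.queryStepCountStarting(from: weekAgo, to: now, to: OperationQueue.main) { steps, error in
        if let error = error {
            print("Step query failed: \(error)")
            return
        }
        print("Steps in the last 7 days: \(steps)")
    }
}
```

Swapping `OperationQueue.main` for your own `OperationQueue()` would move the handler off the main thread.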

Activity

Next up with activity tracking are two new classes, CMMotionActivityManager and CMMotionActivity. The activity manager follows the same pattern as the step counter, with a class method to determine availability, and block-based methods for updates and queries.

In this case though, the query and update callback blocks behave slightly differently. The query block receives an ordered array of CMMotionActivity objects. The activities are ordered by time, based on when they occurred in the specified window. This is very similar to the new Core Location deferred location updates method, which delivers a list of location updates in a similarly ordered fashion. The update callback block instead receives a single CMMotionActivity object, and gets called each time the activity changes.
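Here is roughly what the two styles look like side by side. This is a sketch, not code lifted from Runtime: the `ActivityExample` class and the one-hour window are placeholders, and the signatures are how I understand `CMMotionActivityManager` to import into Swift.

```swift
import CoreMotion
import Foundation

// Hypothetical wrapper class around the real CMMotionActivityManager.
final class ActivityExample {
    private let manager = CMMotionActivityManager()

    // Query: the handler receives a time-ordered array of activities.
    func queryLastHour() {
        guard CMMotionActivityManager.isActivityAvailable() else { return }

        let now = Date()
        let hourAgo = now.addingTimeInterval(-60 * 60)
        manager.queryActivityStarting(from: hourAgo, to: now, to: OperationQueue.main) { activities, error in
            guard let activities = activities else {
                print("Activity query failed: \(String(describing: error))")
                return
            }
            for activity in activities {
                print("\(activity.startDate): walking=\(activity.walking) running=\(activity.running)")
            }
        }
    }

    // Updates: the handler receives one activity each time it changes.
    func startUpdates() {
        guard CMMotionActivityManager.isActivityAvailable() else { return }

        manager.startActivityUpdates(to: OperationQueue.main) { activity in
            guard let activity = activity else { return }
            print("Activity changed at \(activity.startDate)")
        }
    }

    func stopUpdates() {
        manager.stopActivityUpdates()
    }
}
```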

CMMotionActivity objects encapsulate what type of activity has taken place, be it running, walking, standing, driving, or an unknown type of activity, as well as the system's confidence level that it has correctly identified that activity. One thing that can be kind of funny when you start looking at the data is when you see an Unknown activity type with a low or high degree of confidence. That means that iOS is either sort of sure, or absolutely sure, that it has no idea what you are doing :)
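For reference, this is one way you might turn an activity into a readable label. The `describe` function is purely illustrative. In the API, what I call standing and driving above are the `stationary` and `automotive` flags, and as far as I can tell more than one flag can be set at once, so the order of the checks below is my own arbitrary choice.

```swift
import CoreMotion

// Hypothetical helper: picks the first matching flag, in an arbitrary order.
func describe(_ activity: CMMotionActivity) -> String {
    let kind: String
    if activity.running {
        kind = "running"
    } else if activity.walking {
        kind = "walking"
    } else if activity.automotive {
        kind = "driving"
    } else if activity.stationary {
        kind = "standing"
    } else {
        // Covers the explicit unknown flag as well as "no flag set".
        kind = "unknown"
    }

    let confidence: String
    switch activity.confidence {
    case .low: confidence = "low"
    case .medium: confidence = "medium"
    case .high: confidence = "high"
    @unknown default: confidence = "unrecognized"
    }

    return "\(kind) (\(confidence) confidence)"
}
```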

One pattern I've noticed with the data is how it transitions from a low, to a medium, to a high degree of confidence for something like walking or running. There tends to be about 5 seconds' worth of low confidence, about 5 seconds' worth of medium confidence, and then an extended period of high confidence if you maintain the same type of activity for a long time. Below is a screenshot of a test app I wrote to take a look at the data being returned when running a query for activities over a certain period of time. Red represents low confidence and green represents high confidence. The period of time below is me shuffling through the throng of people at Circuit of the Americas after the US Grand Prix last Sunday, which is why it's slightly chaotic.

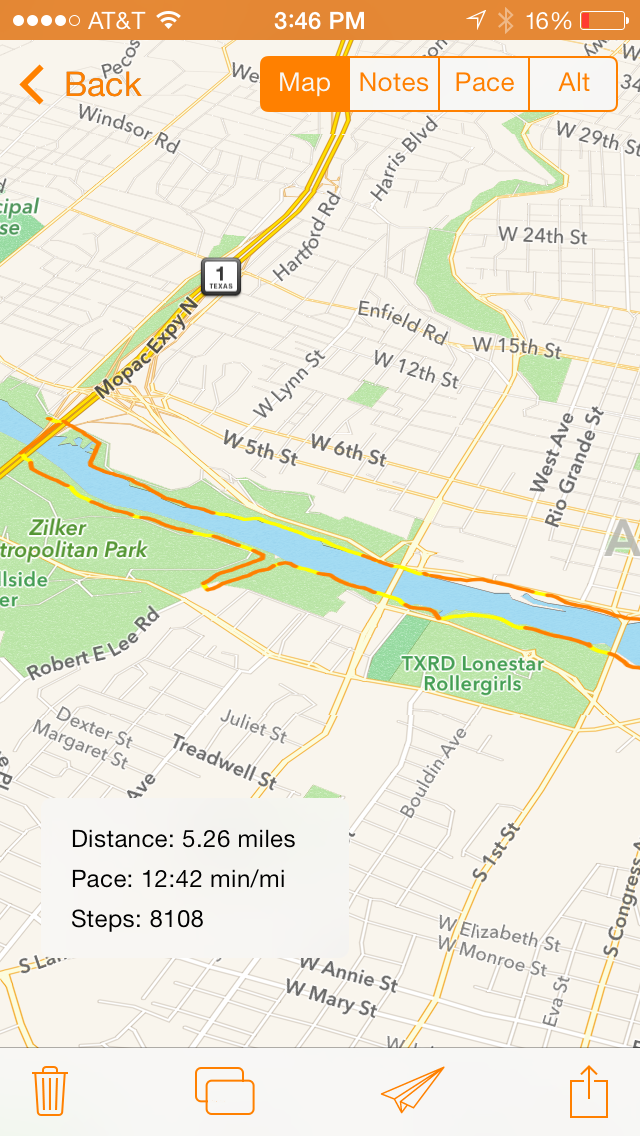

Overall I feel like the activity data is extremely accurate. I've tested it out pretty thoroughly with Runtime on a few runs here in Austin, and out in New York's Central Park. I've stuck with the low confidence threshold for running and walking, because even that seems to be pretty accurate for my needs. Here's a screenshot from Runtime showing the different activity types during one of my runs. The time I spent running is highlighted orange, while the time spent walking is highlighted yellow.

To build this feature in Runtime I used the query API, and simply queried the activities between the start and end time of a user's run. I can then iterate through the returned activities to determine how to highlight the route the user took out on the trail.
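The iteration step can be sketched without Core Motion at all. This is not Runtime's actual code: `ActivityKind`, `ActivitySample`, `Segment`, and `segments(from:runEnd:)` are all hypothetical names, standing in for values you would read off each `CMMotionActivity`. The sketch assumes the samples are already sorted by start date, which is how the query delivers them.

```swift
import Foundation

// Hypothetical stand-ins for what you would read from CMMotionActivity.
enum ActivityKind { case walking, running, other }

struct ActivitySample {
    let startDate: Date
    let kind: ActivityKind
}

// One contiguous stretch of the run with a single activity kind.
struct Segment: Equatable {
    let kind: ActivityKind
    let start: Date
    let end: Date
}

// Collapses time-ordered samples into segments. Each sample lasts until
// the next sample starts; the final one lasts until `runEnd`.
func segments(from samples: [ActivitySample], runEnd: Date) -> [Segment] {
    var result: [Segment] = []
    for (index, sample) in samples.enumerated() {
        let end = index + 1 < samples.count ? samples[index + 1].startDate : runEnd
        if let last = result.last, last.kind == sample.kind {
            // Same kind as the previous segment: extend it.
            result[result.count - 1] = Segment(kind: last.kind, start: last.start, end: end)
        } else {
            result.append(Segment(kind: sample.kind, start: sample.startDate, end: end))
        }
    }
    return result
}
```

Each resulting segment can then be matched against the timestamps of the recorded route points to decide which color to draw that stretch in.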

Conclusion

Both APIs are very nicely designed block-based interfaces. In some ways I look at this as the next evolution of Apple's API design patterns. A class method to determine whether or not access is available. Update methods with a callback block. And query methods with a callback block. They're very clean, functional, and easy interfaces to use.

The data also appears to be highly accurate. The activity detection in particular is basically dead on for distinguishing between walking and running. I think the accuracy may vary slightly based on how you hold your phone, but with it in my pocket or in an arm band I have noticed very high accuracy levels.

If you're considering adding support for the M7 to your app, hopefully this will help point you in the right direction. I think it's great that more apps beyond fitness apps are beginning to use the M7. One example is Day One, the excellent journalling app for iOS and Mac, which lets you add your step data to your journal entries in its latest update. I desperately wish I'd had an iPhone 5s during my John Muir Trail hike this summer, so that I could have used this feature!

The M7 is a great new feature for iOS, and something that can help build a better experience in your app by giving the user access to more information about their physical activity. It's also a fun API to use.